Levenshtein Distance and Distance Similarity Functions

Russian scientist Vladimir Levenshtein discovered the Levenshtein Distance algorithm in 1965. The algorithm produces the number of edits (i.e., insertions, deletions, and substitutions) required to change one string into the other. Consider the distance between “Steven” and “Stephen”:

Step-1: Substitute "v" for "p"Step-2: Insert "h" after the "p"

It takes two edits to change “Steven” to “Stephen”. The distance is 2.

The distance similarity expands on the distance algorithm by creating a percentage from the number of edits (distance algorithm’s output). The percentage indicates how similar the words are to one another. We needed two edits to change Steven to Stephen in the example above. That could be expressed by saying Steven is 71% similar to Stephen.

It’s calculated with the following formulae:

[distance_similarity] = 100 - ([distance] / [length_of_longest_string] * 100)Apache Spark includes an implementation of the Levenschtein Distance function. To implement the distance similarity, your code needs to perform the extra calculations:

## Import the `levenshtein` function

from pyspark.sql.functions import levenshtein

## Creating a single data from with the values I want to compare

df0 = spark.createDataFrame([('kitten', 'sitting',)], ['l', 'r'])

## Let's compare the two values...

df0.select(levenshtein('l', 'r').alias('d')).collect()The following list of name pairs can be enriched to provide their distance similarity.

foo,bar Suzie Chappman,Suzy Chappman Billy Wonka,William Wonka Kelly Schmitt,Kelly Schmitt Devon Parker,Devon Jones Kylie LeBlanc,Stacy LeBow

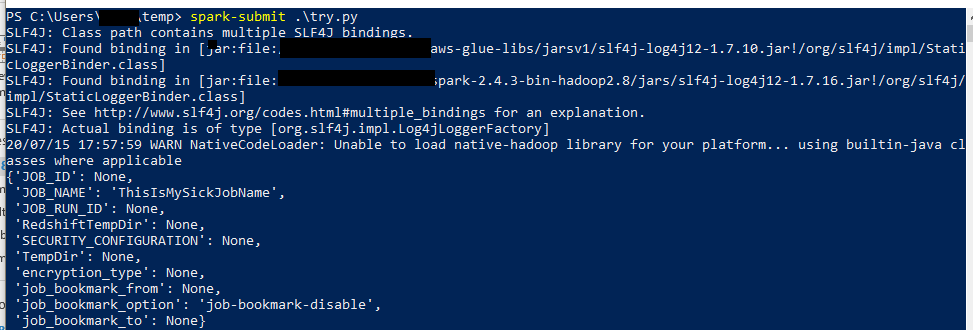

In the following, we use PySpark to establish the similarity between each pair of names:

from pyspark.sql import SparkSession

from pyspark.sql.functions import levenshtein, length, when, col

from pyspark.sql.types import IntegerType

def spark_session_get():

try:

spark

except NameError:

app_name = "Sketch distance similarity between two words"

spark = SparkSession.builder.appName(app_name).getOrCreate()

return spark

def source_get(source_file_path, format='csv', header=True):

return spark.read.load(source_file_path, header=header, format=format)

def source_enrich_distance_similarity(source_raw):

source_with_distance = source_raw.withColumn('customer_foo_size', length(source_raw.SAR)) \

.withColumn('customer_bar_size', length(source_raw.PAR)) \

.withColumn('distance', levenshtein(source_raw.SAR, source_raw.PAR))

return source_with_distance \

.withColumn( 'similarity', 100 - (col("distance") / when(col("customer_bar_size") >= col("customer_foo_size"), col("customer_bar_size") ).otherwise(col("customer_foo_size")) * 100))

spark = spark_session_get()

source_file_path = './sample.csv'

source_raw = source_get(source_file_path)

source_enriched = source_enrich_distance_similarity(source_raw)

source_enriched.show()Analysts can build on this example to establish the similarity between various words and phrases.